CYBERCRIME CASES AND CONFUSION MATRIX

New technologies create new criminal opportunities but few new types of crime. What distinguishes cybercrime from traditional criminal activity? Obviously, one difference is the use of the digital computer, but technology alone is insufficient for any distinction that might exist between different realms of criminal activity. Criminals do not need a computer to commit fraud, traffic in child pornography and intellectual property, steal an identity, or violate someone’s privacy. All those activities existed before the “cyber” prefix became ubiquitous. Cybercrime, especially involving the Internet, represents an extension of existing criminal behavior alongside some novel illegal activities.

DEFINITION

Cybercrime, also called computer crime, is the use of a computer as an instrument to further illegal ends, such as committing fraud, trafficking in child pornography and intellectual property, stealing identities, or violating privacy. Cybercrime, especially through the Internet, has grown in importance as the computer has become central to commerce, entertainment, and government.

Most cybercrime is an attack on information about individuals, corporations, or governments. Although the attacks do not take place on a physical body, they do take place on the personal or corporate virtual body, which is the set of informational attributes that define people and institutions on the Internet. In other words, in the digital age, our virtual identities are essential elements of everyday life: we are a bundle of numbers and identifiers in multiple computer databases owned by governments and corporations. Cybercrime highlights the centrality of networked computers in our lives, as well as the fragility of such seemingly solid facts as individual identity.

What is a Confusion Matrix?

A confusion matrix is a table that is often used to describe the performance of a classification model (or “classifier”) on a set of test data for which the true values are known. The confusion matrix itself is relatively simple to understand, but the related terminology can be confusing.

For a binary classifier, it is a 2×2 table whose four cells are the combinations of predicted and actual values.

Let’s understand TP (true positive), FP (false positive), FN (false negative), and TN (true negative) through a pregnancy analogy.

True Positive:

Interpretation: You predicted positive and it’s true.

You predicted that a woman is pregnant and she actually is.

True Negative:

Interpretation: You predicted negative and it’s true.

You predicted that a man is not pregnant and he actually is not.

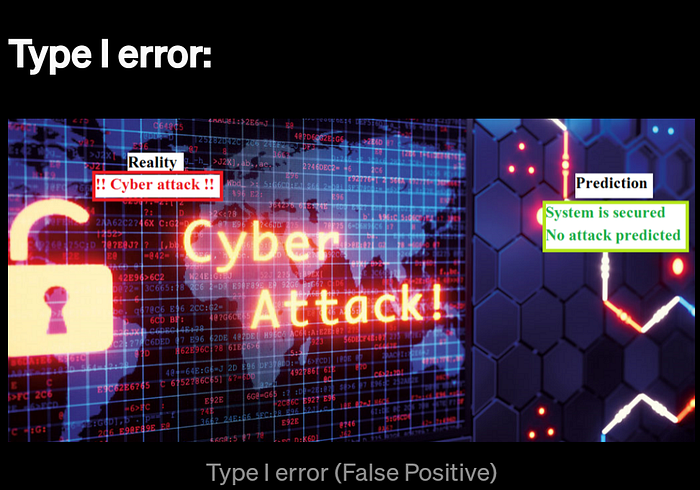

False Positive: (Type I Error)

Interpretation: You predicted positive and it’s false.

You predicted that a man is pregnant but he actually is not.

False Negative: (Type II Error)

Interpretation: You predicted negative and it’s false.

You predicted that a woman is not pregnant but she actually is.

Just remember: Positive and Negative describe the predicted value. True and False describe whether that prediction matches the actual value.
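The four outcomes can be counted directly from paired lists of actual and predicted labels. Below is a minimal sketch. It assumes binary labels where `True` marks the positive class ("pregnant"). The function name `confusion_counts` and the sample data are hypothetical.

```python
def confusion_counts(actual, predicted):
    """Count TP, TN, FP and FN for binary labels where True is the positive class."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for is_positive, predicted_positive in zip(actual, predicted):
        if predicted_positive and is_positive:
            counts["TP"] += 1
        elif not predicted_positive and not is_positive:
            counts["TN"] += 1
        elif predicted_positive:  # predicted positive, actually negative
            counts["FP"] += 1
        else:  # predicted negative, actually positive
            counts["FN"] += 1
    return counts


# Hypothetical test results: True means "pregnant".
actual = [True, False, False, True, True, False]
predicted = [True, False, True, False, True, False]
print(confusion_counts(actual, predicted))  # → {'TP': 2, 'TN': 2, 'FP': 1, 'FN': 1}
```

These four counts fill the cells of the 2×2 matrix. For example, scikit-learn's convention puts rows for the actual class and columns for the predicted class.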

Type I error:

This type of error is not very dangerous. In reality the system is safe, but the model predicted an attack. The team would be notified and check for any malicious activity, which does not cause any direct harm. The False Positive cases above fall into this category, and they can be termed false alarms.

Type II error:

This type of error can prove to be very dangerous. The system predicted no attack, but in reality an attack takes place. In that case no notification reaches the security team, and nothing can be done to prevent the attack. The False Negative cases above fall into this category, so one of the aims of the model is to minimize this value.
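An attack detector that flags an event when a score crosses a threshold faces a trade-off between the two error types. Below is a minimal sketch. It assumes hypothetical anomaly scores between 0 and 1, and `count_errors` is an illustrative helper, not part of any real tool. Lowering the threshold catches more attacks (fewer Type II errors) at the cost of more false alarms (Type I errors).

```python
def count_errors(scores, is_attack, threshold):
    """Flag an event as an attack when its score >= threshold and
    return (false positives, false negatives)."""
    false_positives = false_negatives = 0
    for score, attack in zip(scores, is_attack):
        flagged = score >= threshold
        if flagged and not attack:
            false_positives += 1  # Type I error: false alarm
        elif attack and not flagged:
            false_negatives += 1  # Type II error: missed attack
    return false_positives, false_negatives


# Hypothetical detector scores and ground truth for seven events.
scores = [0.05, 0.20, 0.35, 0.40, 0.55, 0.70, 0.90]
is_attack = [False, False, True, False, True, True, True]

for threshold in (0.6, 0.3):
    fp, fn = count_errors(scores, is_attack, threshold)
    print(f"threshold={threshold}: {fp} false alarm(s), {fn} missed attack(s)")
```

With these invented numbers, a threshold of 0.6 gives no false alarms but misses two attacks. Lowering it to 0.3 misses none at the cost of one false alarm, which is usually the preferable trade when a missed attack is the more dangerous error.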

CYBERCRIME CASE OF THE NETHERLANDS

1. INTRODUCTION

Recently, a news article reported that the registered level of crime in the Netherlands has decreased to its 1980 level. Although the number of crimes has decreased, the ratio between the different types of crime has shifted. Due to the growth of the Internet and other technologies over the past 20 years, crime involving information and communication technologies (ICT) has increased significantly. In 2016, 11% of all Dutch residents were victimized by cybercrime¹. Only 8% of the victims filed a police report. In this paper, the term ‘a crime involving ICT’ is used instead of ‘cybercrime’. This way the analysis covers not only criminal court cases labeled as cybercrime but also cases in which ICT played a role without being labeled as cybercrime. Not all crimes involving ICT are registered as such. They may appear as computer-aided traditional crimes, or the involvement of ICT may be ignored or not explicitly mentioned. Consequently, the number of crimes involving ICT may be much higher than the registered figures suggest, which makes fighting and preventing them increasingly relevant. This makes ICT involvement in crimes worth investigating.

2. RELATED WORK

Some research has been done on text mining and machine learning for crime detection, but not with criminal court cases as a dataset. One master’s thesis is closely related: it investigates whether Dutch police reports can be classified through text mining, but it uses police reports instead of criminal court cases [4]. Androutsopoulos et al. compare a Naïve Bayesian filter with a keyword-based filter and conclude that automatically trainable anti-spam filters are viable [2]. In this research, a Naïve Bayesian classifier is trained to automatically detect ICT involvement in criminal court cases. A study by Wang et al. on automatic document classification uses Bayes’ theorem as the basis of its algorithm for classifying web documents [18]. One of its conclusions, consistent with earlier research, was that the multivariate Bernoulli event model performed worse than the multinomial event model. This is relevant here because this research also uses Bayes’ theorem to classify the court cases. There seem to be no studies that combine Naïve Bayes as an algorithm with criminal court cases as a dataset. Therefore, the proposed research can provide new insights.
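To make the multinomial event model concrete, below is a minimal from-scratch sketch of a multinomial Naïve Bayes text classifier with Laplace (add-one) smoothing. It is not the authors' implementation. The class name, the lowercase whitespace tokenization, the `ict`/`non-ict` labels and the English training sentences are all hypothetical; the real court cases are in Dutch.

```python
import math
from collections import Counter, defaultdict


class MultinomialNaiveBayes:
    """Multinomial Naive Bayes over word counts with Laplace (add-one) smoothing."""

    def fit(self, documents, labels):
        self.class_counts = Counter(labels)          # documents per class
        self.word_counts = defaultdict(Counter)      # class -> word -> count
        self.vocabulary = set()
        for doc, label in zip(documents, labels):
            words = doc.lower().split()
            self.word_counts[label].update(words)
            self.vocabulary.update(words)
        self.total_words = {c: sum(cnt.values()) for c, cnt in self.word_counts.items()}
        return self

    def log_score(self, document, label):
        """Unnormalized log posterior: log P(class) + sum of log P(word | class)."""
        n_docs = sum(self.class_counts.values())
        score = math.log(self.class_counts[label] / n_docs)
        denominator = self.total_words[label] + len(self.vocabulary)
        for word in document.lower().split():
            if word not in self.vocabulary:
                continue  # words never seen in training are ignored
            score += math.log((self.word_counts[label][word] + 1) / denominator)
        return score

    def predict(self, document):
        return max(self.class_counts, key=lambda c: self.log_score(document, c))


# Hypothetical, tiny training set.
documents = [
    "suspect hacked the email account and sent phishing messages",
    "the computer was used to access the bank account without permission",
    "suspect stole a bicycle from the station",
    "the defendant assaulted the victim outside the bar",
]
labels = ["ict", "ict", "non-ict", "non-ict"]

model = MultinomialNaiveBayes().fit(documents, labels)
print(model.predict("phishing email sent from a hacked computer"))  # → ict
print(model.predict("the suspect stole a bicycle"))                 # → non-ict
```

The multinomial model scores a document by how often each word occurs in it. A multivariate Bernoulli model would instead score the presence or absence of every vocabulary word, including the words the document does not contain.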

3. PRE-PROCESSING OF THE DATA

As the files were downloaded in XML format, they first had to be stripped of their XML tags, which resulted in a text-only string. This string then needed to be loaded into a data frame so that the learning algorithm could process it.
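Below is a minimal sketch of this step using Python's standard-library `xml.etree.ElementTree`. It assumes each court case is a well-formed XML string. The tag names (`case`, `para`, `emph`), the case identifiers and the texts are hypothetical, not the real schema of the downloaded files.

```python
import xml.etree.ElementTree as ET


def xml_to_text(xml_string):
    """Strip all tags from an XML document and return its text as one string."""
    root = ET.fromstring(xml_string)
    # itertext() yields element text and tail fragments in document order.
    fragments = (fragment.strip() for fragment in root.itertext())
    # Join with spaces so adjacent paragraphs don't run together,
    # then collapse any runs of whitespace into single spaces.
    return " ".join(" ".join(fragments).split())


# Hypothetical court-case files keyed by an invented case identifier.
raw_files = {
    "case-001": "<case><para>The suspect used a <emph>phishing</emph> e-mail.</para>"
                "<para>He was convicted.</para></case>",
    "case-002": "<case><para>The defendant stole a bicycle.</para></case>",
}

rows = [{"case_id": case_id, "text": xml_to_text(xml)} for case_id, xml in raw_files.items()]
for row in rows:
    print(row)
```

Each row pairs a case with its plain text. With pandas installed, `pandas.DataFrame(rows)` turns the list into a data frame with `case_id` and `text` columns. For files on disk, `ET.parse(path).getroot()` can replace `ET.fromstring`. Joining fragments with spaces keeps paragraphs apart. The trade-off is an extra space wherever inline markup splits a single word.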

4. CALCULATING THE ACCURACY

Next, the accuracy of the classification needed to be determined to measure how well the algorithm performed on the dataset.

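The choice for the number of folds (K) is based on the low number of files per class. K-Fold cross-validation made it possible both to calculate the F1 score (f1_score) as a measure of classification performance and to create a confusion matrix, which gives more insight into the accuracy per class. The formula for the F1 score is as follows:

F1 = 2 × (precision × recall) / (precision + recall), where precision = TP / (TP + FP) and recall = TP / (TP + FN).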

True positives, true negatives, false positives, and false negatives can be arranged in a confusion matrix to show the performance of the classifier.

[Figure: Binary confusion matrix]
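K-fold cross-validation gives every file exactly one prediction from a model that was not trained on it. Those out-of-fold predictions can then be pooled into a single confusion matrix and F1 score. Below is a minimal binary sketch, assuming labels 0/1 with 1 as the positive class. The one-feature threshold classifier is a hypothetical stand-in for Naïve Bayes, and K = 5 is chosen arbitrarily. Folds are contiguous and unshuffled for readability. In practice the data is usually shuffled, and with few files per class, stratified folds that preserve class proportions are a common choice.

```python
def k_fold_indices(n_samples, k):
    """Split indices 0..n_samples-1 into k contiguous folds of near-equal size."""
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def fit_threshold(xs, ys):
    """Hypothetical toy classifier: threshold halfway between the two class means."""
    neg = [x for x, y in zip(xs, ys) if y == 0]
    pos = [x for x, y in zip(xs, ys) if y == 1]
    return (sum(neg) / len(neg) + sum(pos) / len(pos)) / 2


def cross_val_predict(xs, ys, k):
    """Predict each sample with a model trained on the other k-1 folds."""
    predictions = [None] * len(xs)
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        threshold = fit_threshold(train_x, train_y)
        for i in test_idx:
            predictions[i] = 1 if xs[i] >= threshold else 0
    return predictions


def f1_score(y_true, y_pred):
    """F1 for the positive class (label 1): harmonic mean of precision and recall."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical one-feature data, interleaved so every fold holds both classes.
xs = [0.1, 0.9, 0.2, 0.8, 0.3, 0.7, 0.4, 0.6, 0.55, 0.45]
ys = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
preds = cross_val_predict(xs, ys, k=5)
print(preds, f1_score(ys, preds))
```

On this invented data the pooled predictions are [0, 1, 0, 1, 0, 1, 0, 1, 1, 0]. The two overlapping points (0.55 and 0.45) are misclassified, giving one false positive and one false negative. Precision, recall, and F1 are therefore all about 0.8.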

5. CONFUSION MATRIX AND ACCURACY

The confusion matrix obtained from the classifier is depicted in the figure below. It is shown in normalized form, since the classes are imbalanced. Along the diagonal, the darker the blue, the better the classifier is at predicting files for that class. Dark cells off the diagonal show where the classifier gets ‘confused’. The classifier does not do well on the ‘identity theft’ class, and there is a good reason for this. Reading the court cases revealed that ‘platform fraud’ is linked to ‘identity theft’, as stolen identities are often used to commit platform fraud. Accordingly, the confusion matrix shows that ‘identity theft’ is often predicted as ‘platform fraud’.
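With imbalanced classes, raw counts let the largest classes dominate the color scale, so the matrix is normalized. Below is a minimal sketch. It assumes normalization per true class (each row divided by its total), a common choice, though the axis used for the figure is not stated. The third class name and all counts are invented for illustration and are not the study's results.

```python
def row_normalize(matrix):
    """Divide each row by its total so it shows, for one true class, the share
    of that class's files predicted as each class (each row sums to 1)."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([count / total if total else 0.0 for count in row])
    return normalized


classes = ["identity theft", "platform fraud", "other"]
# Invented counts for illustration only: rows = true class, columns = predicted class.
counts = [
    [4, 6, 0],
    [2, 36, 2],
    [0, 5, 45],
]

for name, row in zip(classes, row_normalize(counts)):
    print(f"{name:>15}: " + "  ".join(f"{share:.2f}" for share in row))
```

In a row-normalized matrix, each diagonal entry is the recall of that class. With these invented counts, only 40% of the small ‘identity theft’ class lands on the diagonal, while 60% is predicted as ‘platform fraud’. The raw counts would hide this pattern next to the much larger classes.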